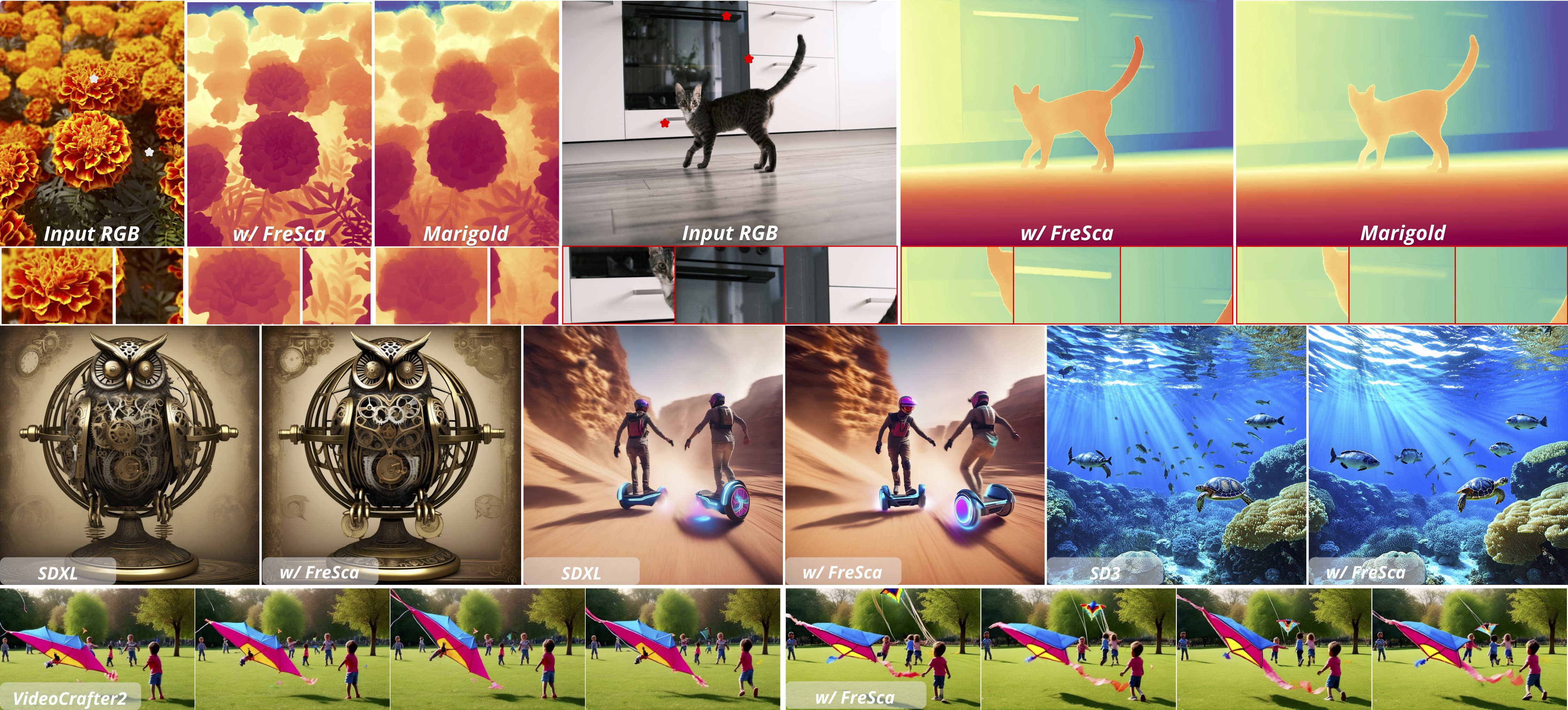

FreSca is a frequency-domain scaling technique that provides a zero-shot performance boost to diffusion-based applications like depth estimation and image editing without requiring retraining.

FreSca significantly enhances the quality of text-to-video generation by improving visual fidelity, detail, and consistency throughout the video frames.

FreSca enhances video generation quality without model retraining, improving fidelity, detail, and visual consistency by applying frequency-space scaling to the diffusion process.

FreSca enhances depth prediction by applying frequency-domain scaling to enhance detail clarity in high-frequency components.

Given a diffusion model, it naturally incorporates two key components: (1) the noise predictions $\epsilon$ which capture the semantics of the image, and (2) the classifier-free guidance $\omega$ which scales these noise predictions to amplify or suppress specific semantic features. FreSca leverages this scaling space by applying frequency-dependent scaling factors to different components of the model's noise predictions.

Given a noisy image $x_t$ at timestep $t$, we first calculate the noise prediction difference $\Delta\epsilon_t$ between the conditional and unconditional predictions. This difference captures the semantic signal. To manipulate this signal in the frequency domain, we apply a Fast Fourier Transform (FFT) to decompose $\Delta\epsilon_t$ into its spectral components. We then apply separate scaling factors: $l$ for low-frequency components (which control overall structure) and $h$ for high-frequency components (which control details and textures). After frequency-domain scaling, we apply the inverse FFT to obtain the modified noise prediction, which is used in the standard diffusion sampling equation to produce $x_{t-1}$. This simple yet effective approach allows precise control over different aspects of the generated content without requiring model retraining.